Recentralization May Be a Good Thing

If organizations aren't cognizant of these issues, deployment and management will become more complex and more likely to hamper expansion. There are several ways to address these issues. One possibility is that vendors will begin to package their distributed software development environments with sophisticated systems and application management facilities in order to prepare IT organizations for the deployment and management of applications.

However, if the problems I outlined in the software distribution, performance management, troubleshooting, and code management categories go unresolved, there is a good chance that IT organizations will follow the path of least resistance and return to centralization. They may decide that supporting distributed environments is too complex. This realization may also coincide with the emergence of powerful, parallel processing hardware that could help recentralize computing resources at a fraction of the cost of mainframe computers. Companies would then be in a position to bring software back to their IT organizations and give users a new generation of powerful terminals to access their applications.

With the recentralization of applications, system and application management becomes centralized, too. I believe that some recentralization is probably a good thing, because, from a consolidated point of view, certain management issues are easier to handle. However, organizations should recentralize their computing environments only when it makes sense. For example, if a corporation's repository, key business rules, and data do not have to be moved frequently, they can all be centralized on a mainframe. Distributed computing, on the other hand, should be applied to processes and applications that are closer to an organization's actual business users.
This might include copies of key data and business rules to which users need frequent access, complex graphics, and anything having to do with ease of use and the user interface.

Recentralization might be inevitable. Plus, if it's applied appropriately, it just might be a good thing. But there's a risk if organizations move too far toward centralization. To be effective, organizations need a good balance between centralization and distribution.

Concepts of ESD Control

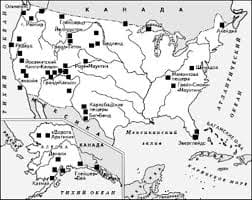

With product performance, reliability and quality at stake, controlling electrostatic discharge (ESD) in the electronics environment can seem a formidable challenge. However, in designing and implementing ESD control programs, the task becomes somewhat simpler and more focused if it is approached with just four basic concepts of control. When approaching this task, we also need to keep in mind the ESD corollary to Murphy's Law, "No matter what we do, static charge will try to find a way to discharge."

The first control concept is to design products and assemblies to be as immune as is reasonable to the effects of electrostatic discharge. This involves such steps as using less-static-sensitive devices or providing appropriate input protection on devices, boards, assemblies and equipment. For engineers and designers, the paradox is that advancing product technology requires smaller and more complex geometries that often are more susceptible to ESD.

Knowing that product design isn't the whole answer, the second concept of control is to eliminate or reduce the generation and accumulation of electrostatic charge in the first place. It's fairly basic: If there is no charge, there will be no discharge. We begin by removing as many static-generating processes or materials as possible from the work environment. Some examples are friction and common plastics. Keeping other processes and materials at the same electrostatic potential is also an important factor. Electrostatic discharge does not occur between materials that are kept at the same potential or are kept at zero potential. In addition, providing a ground path can reduce charge generation and accumulation. Wrist straps, flooring and work surfaces are all examples of effective methods that can be used to get rid of electrostatic charges by grounding.

We simply can't eliminate all generation of static in the environment.
Thus, the third concept of control is to safely dissipate or neutralize those electrostatic charges that do exist. Again, proper grounding plays a major role. For example, workers who "carry" a charge into the work environment can rid themselves of that charge when they attach a wrist strap or when they step on an ESD floor mat while wearing ESD control footwear. The charge goes to ground rather than being discharged into a sensitive part. To prevent damaging a charged device, the rate of discharge can be controlled with static-dissipative materials. However, for some objects, such as common plastics and other insulators, grounding does not remove an electrostatic charge because there are no conduction pathways. To neutralize charges on these types of materials, ionization, either for localized sections or across the whole area, may prove to be the answer. The ionization process generates negative or positive ions that are attracted to the surface of a charged object, thereby effectively neutralizing the electrostatic charge.

The final ESD control concept is to prevent discharges that do occur from reaching susceptible parts and assemblies. One way to do this is to provide parts and assemblies with proper grounding or shunting that will "carry" any discharge away from the product. A second method is to package and transport susceptible devices in appropriate packaging and materials-handling products. These materials effectively shield the product from electrostatic charge, as well as reduce the generation of charge caused by any movement of product within the container.

While these four concepts may seem rather basic, they can aid in the selection of appropriate materials and procedures to use in effectively controlling electrostatic discharge. In most circumstances, effective programs will involve all four of these concepts. No single procedure or product will be able to do the whole job.
In developing electrostatic discharge control programs, we need to identify the devices that are susceptible and to what level. Then, we must determine which of the four concepts will protect these devices. Finally, we can select the combination of procedures and materials that will fulfill the concepts of control.

In future columns, these concepts will be discussed in greater detail, focusing on various materials and procedures that meet protection goals. Other related ESD topics, such as auditing, training and failure mechanisms, will also be covered. (Circuits Assemblies)

Needed: An admiral of the "Nano Sea"?

To accelerate the exploitation of structures with dimensions <100 nm, the governments of the US, Japan, and the European Union have established Nanotechnology Initiatives. The claim is that matter has radically different properties when its characteristic dimensions are between 1 and 100 nm and that this new realm offers all sorts of opportunities. Taxpayer funding, of course, will be needed for precompetitive R&D to realize this potential, and nanotechnology promoters have been busily courting government bureaucrats.

At a nanotechnology conference last summer, ethicist George Khushf of the University of South Carolina pointed something out: If the nano realm really is so different, we cannot rationally evaluate either the opportunities or the dangers of exploring it. So how can we decide whether a society should support such an effort or not? If nanotechnology were just an extension of some known trend, then we could extrapolate the known costs, benefits, and risks. But the funding needed for such incremental progress should come from those who have a reasonable expectation of benefit, and dramatic new government initiatives wouldn't be needed. On the other hand, if nanotechnology is radically different, then the risks are unknowable and the precautionary principle militates against any exploration at all.

According to Prof.
Khushf, all discussions of exploring the radically new devolve into this paradox: Claiming that something really is different implies that its dangers are unknown and necessarily scares someone into bitter opposition. Claiming that the dangers are acceptable implies that there is nothing really new to find. A comfortable, risk-averse and consensus-seeking culture just cannot rationally decide to explore!

Listening to Prof. Khushf, I thought of the prototypical government-funded exploration program, one that has produced immense benefits for some, but which remains controversial 500 years later: the funding of Christopher Columbus by the new government of Spain, supposedly to find an alternate route to the Orient. How could that have been rationally justified in 1492? Of course it wasn't. The entire project was a gamble based on misrepresentation, both by Columbus and by his sponsors.

Most Americans know the legend of Columbus, but have not thought through its context and inconsistencies. Much of what actually went on is lost in the mist of time. However, it is clear that Columbus thought the world was round, and was navigator enough to estimate its circumference (in sailing time) using a quadrant and the method demonstrated by Eratosthenes of Alexandria in the 3rd century B.C. (For a sphere, the circumference pole-to-pole is the same as around the equator.) Marco Polo and the Arab traders had a fair idea how far east they had traveled to get to Asia. Subtracting that from Eratosthenes' estimate left leagues and leagues more ocean than could be traversed by Columbus's poorly provisioned little fleet. In order to get funded, he had dramatically underestimated the distance west to Asia! Why? Because he had confidence there was something valuable out there, even if he didn't know quite what! Columbus had very carefully gotten himself named viceroy of any lands discovered as well as admiral of the Ocean Sea.
He hoped to return with wealth and honor, no matter what, but he had to claim he was sailing into the known, not the unknown!

The sponsors had their own reasons to invest in the enterprise. For one thing, they wanted the government to control it, rather than let restive New Christian bankers claim lucrative colonies and trade routes. The Portuguese had done better than Spain in the exploration business; funding Columbus to go west seemed an imaginative way to propitiate Spain's exploration lobby. Then there was the potential for converts to Christianity….

What happened? Well, there were islands out there all right, with Indians, but they knew nothing of India or China or Japan! Columbus found new foods and a smokable herb, but no gold or nutmeg. The flagship sank. At least forty sailors were left behind, to be found dead on the second expedition. The returning sailors probably brought syphilis to Europe. Columbus lived in denial and controversy for the rest of his life. Still, Spain found itself in possession of a great empire. Eventually, gold was found, as well as chocolate, chili peppers, and a really long route to the Spice Islands.

Would the world have been better off if Columbus had been denied funding? It depends; the Aztec and Inca elites certainly would have been happier. Could the outcome have been improved by rational ethical discussion beforehand? No way!

Atomic Force Microscopy

1. INTRODUCTION

The new technology of scanning probe microscopy has created a revolution in microscopy, with applications ranging from condensed matter physics to biology. This issue of ScienceWeek presents only a glimpse of the many and varied applications of atomic force microscopy in the sciences.

The first scanning probe microscope, the scanning tunneling microscope, was invented by G. Binnig and H. Rohrer in the 1980s (they received the Nobel Prize in Physics in 1986), and the invention has been the catalyst of a technological revolution.
Scanning probe microscopes have no lenses. Instead, a "probe" tip is brought very close to the specimen surface, and the interaction of the tip with the region of the specimen immediately below it is measured. The type of interaction measured essentially defines the type of scanning probe microscopy. When the interaction measured is the force between atoms at the end of the tip and atoms in the specimen, the technique is called "atomic force microscopy". When the quantum mechanical tunneling current is measured, the technique is called "scanning tunneling microscopy". These two techniques, atomic force microscopy (AFM) and scanning tunneling microscopy (STM), have been the parents of a variety of scanning probe microscopy techniques investigating a number of physical properties.

[Note: In general, "quantum mechanical tunneling" is a quantum mechanical phenomenon involving an effective penetration of an energy barrier by a particle resulting from the width of the barrier being less than the wavelength of the particle. If the particle is charged, the effective particle translocation determines an electric current. In this context, "wavelength" refers to the de Broglie wavelength of the particle, which is given by L = h/mv, with (L) the wavelength of the moving particle, (h) the Planck constant, (m) the mass of the particle, and (v) the velocity of the particle.]

ATOMIC FORCE MICROSCOPY IN BIOLOGY

C. Wright-Smith and C.M. Smith (San Diego State College, US) present a review of the use of atomic force microscopy in biology, the authors making the following points: Since its introduction in the 1980s, atomic force microscopy (AFM) has gained acceptance in biological research, where it has been used to study a broad range of biological questions, including protein and DNA structure, protein folding and unfolding, protein-protein and protein-DNA interactions, enzyme catalysis, and protein crystal growth.
Atomic force microscopy has been used to literally dissect specific segments of DNA for the generation of genetic probes, and to monitor the development of new gene therapy delivery particles.

Atomic force microscopy is just one of a number of novel microscopy techniques collectively known as "scanning probe microscopy" (SPM). In principle, all SPM technologies are based on the interaction between a submicroscopic probe and the surface of some material. What differentiates SPM technologies is the nature of the interaction and the means by which the interaction is monitored.

Atomic force microscopy produces a topographic map of the sample as the probe moves over the sample surface. Unlike most other SPM technologies, atomic force microscopy is not dependent on the electrical conductivity of the product being scanned, and AFM can therefore be used in ambient air or in a liquid environment, a critical feature for biological research. The basic atomic force microscope is composed of a stylus-cantilever probe attached to the probe stage, a laser focused on the cantilever, a photodiode sensor (recording light reflected from the cantilever), a digital translator recorder, and a data processor and monitor.

Atomic force microscopy is unlike other SPM technologies in that the probe makes physical (albeit gentle) contact with the sample. The cornerstone of this technology is the probe, which is composed of a surface-contacting stylus attached to an elastic cantilever mounted on a probe stage. As the probe is dragged across the sample, the stylus moves up and down in response to surface features. This vertical movement is reflected in the bending of the cantilever, and the movement is measured as changes in the light intensity from a laser beam bouncing off the cantilever and recorded by a photodiode sensor. The data from the photodiode is translated into digital form, processed by specialized software on a computer, and then visualized as a topographic 3-dimensional shape.
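The signal chain described above (cantilever bending, photodiode reading, digital conversion, topographic map) can be illustrated with a minimal sketch. It assumes a linear relation between photodiode signal and cantilever deflection, and uses Hooke's law (force = spring constant × deflection) for the tip force. Neither assumption comes from the review, and the calibration constants and function names below are hypothetical placeholders, not values for any real instrument.

```python
# Minimal sketch: turning photodiode readings into a height profile and a tip force.
# All constants are hypothetical; real instruments are calibrated per cantilever.

SENSITIVITY_NM_PER_V = 50.0    # assumed: nm of cantilever deflection per volt of signal
SPRING_CONSTANT_N_PER_M = 0.1  # assumed: stiffness of a soft cantilever


def deflection_nm(photodiode_volts: float) -> float:
    """Convert a photodiode signal (V) to cantilever deflection (nm), assuming linearity."""
    return photodiode_volts * SENSITIVITY_NM_PER_V


def force_nn(deflection: float) -> float:
    """Hooke's law, F = k * z. A stiffness in N/m times a deflection in nm gives nN."""
    return SPRING_CONSTANT_N_PER_M * deflection


def height_profile(scan_line_volts):
    """Relative heights (nm) along one scan line, measured from the lowest point."""
    deflections = [deflection_nm(v) for v in scan_line_volts]
    lowest = min(deflections)
    return [d - lowest for d in deflections]


if __name__ == "__main__":
    scan_line = [0.10, 0.12, 0.30, 0.28, 0.11]  # made-up photodiode readings in volts
    for volts, height in zip(scan_line, height_profile(scan_line)):
        print(f"{volts:.2f} V -> height {height:.1f} nm, "
              f"force {force_nn(deflection_nm(volts)):.2f} nN")
```

Stacking many such scan lines side by side would give the two-dimensional height map that the software renders as a surface.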
ON SCANNING PROBE MICROSCOPY

The invention and development of scanning probe microscopy has taken the ability to image matter to the atomic scale and opened fresh perspectives on everything from semiconductors to biomolecules, and new methods are being devised to modify and measure the microscopic landscape in order to explore its physical, chemical, and biological features.

In scanning tunneling microscopy, electrons quantum mechanically "tunnel" between the tip and the surface of the sample. This tunneling process is sensitive to any overlap between the electronic wave functions of the tip and sample, and depends exponentially on their separation. The scanning tunneling microscope makes use of this extreme sensitivity to distance. In practice, the tip is scanned across the surface, while a feedback circuit continuously adjusts the height of the tip above the sample to maintain a constant tunneling current. The recorded trajectory of the tip creates an image that maps the electronic wave functions at the surface, revealing the atomic landscape in fine detail.

The most widely used scanning probe microscopy technique, one which can operate in air and liquids, is atomic force microscopy. In this technique, a tip is mounted at the end of a soft cantilever that bends when the sample exerts a force on the tip. By optically monitoring the cantilever motion it is possible to detect extremely small chemical, electrostatic, or magnetic forces which are only a fraction of those required to break a single chemical bond or to change the direction of magnetization of a small magnetic grain. Applications of atomic force microscopy have included in vitro imaging of biological processes.
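To give a feel for the exponential dependence of the tunneling current on tip-sample separation mentioned above, here is a rough sketch based on the textbook one-dimensional rectangular-barrier approximation, in which the current scales as exp(-2·κ·d) with κ = sqrt(2·m·φ)/ħ. The model and the 4 eV barrier height (roughly a typical metal work function) are assumptions made for illustration and do not come from the article; the function names are hypothetical.

```python
import math

# Physical constants (SI units)
HBAR = 1.054571817e-34            # reduced Planck constant, J*s
ELECTRON_MASS = 9.1093837015e-31  # kg
EV = 1.602176634e-19              # joules per electronvolt


def decay_constant_per_m(barrier_ev: float) -> float:
    """kappa = sqrt(2 * m * phi) / hbar for an electron under a barrier of height phi."""
    return math.sqrt(2.0 * ELECTRON_MASS * barrier_ev * EV) / HBAR


def relative_current(separation_m: float, barrier_ev: float = 4.0) -> float:
    """Tunneling current relative to zero separation, I/I0 = exp(-2 * kappa * d)."""
    return math.exp(-2.0 * decay_constant_per_m(barrier_ev) * separation_m)


def current_ratio(delta_m: float, barrier_ev: float = 4.0) -> float:
    """Factor by which the current drops when the gap widens by delta_m."""
    return math.exp(2.0 * decay_constant_per_m(barrier_ev) * delta_m)


if __name__ == "__main__":
    one_angstrom = 1e-10  # metres
    print(f"kappa = {decay_constant_per_m(4.0):.3e} per metre")
    print(f"current drop per 0.1 nm of extra gap: x{current_ratio(one_angstrom):.1f}")
```

Under these assumptions, widening the gap by a single atomic diameter changes the current by close to an order of magnitude, which is why a feedback loop holding the current constant can track the surface so finely.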
In general, the various techniques of scanning probe microscopy have now been applied to high-resolution spectroscopy, the probing of nanostructures, measurements of forces in chemistry and biology, the production of deliberate movements of small numbers of atoms, and the use of precision lithography as a tool for making nanometric-sized electronic devices.

The authors conclude: "The scanning probe microscope has evolved from a passive imaging tool into a sophisticated probe of the nanometer scale. These advances point to exciting opportunities in many areas of physics and biology, where scanning probe microscopes can complement macroscopically averaged measurement techniques and enable more direct investigations. More importantly, these tools should inspire new approaches to experiments in which controlled measurements of individual molecules, molecular assemblies, and nanostructures are possible." (The Scientist)
